Tutorial 1: Basics

Official LightAutoML github repository is here

In this tutorial you will learn how to: * run LightAutoML training on tabular data * obtain feature importances and reports * configure resource usage in LightAutoML

0. Prerequisites

0.0. install LightAutoML

[1]:

#!pip install -U lightautoml

0.1. Import libraries

Here we will import the libraries we use in this kernel: - Standard python libraries for timing, working with OS and HTTP requests etc. - Essential python DS libraries like numpy, pandas, scikit-learn and torch (the last we will use in the next cell) - LightAutoML modules: presets for AutoML, task and report generation module

[2]:

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

[3]:

# Standard python libraries

import os

import requests

# Essential DS libraries

import numpy as np

import pandas as pd

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import train_test_split

import torch

# LightAutoML presets, task and report generation

from lightautoml.automl.presets.tabular_presets import TabularAutoML, TabularUtilizedAutoML

from lightautoml.tasks import Task

from lightautoml.report.report_deco import ReportDeco

0.2. Constants

Here we setup some parameters to use in the kernel: - N_THREADS - number of vCPUs for LightAutoML model creation - N_FOLDS - number of folds in LightAutoML inner CV - RANDOM_STATE - random seed for better reproducibility - TEST_SIZE - houldout data part size - TIMEOUT - limit in seconds for model to train - TARGET_NAME - target column name in dataset

[4]:

N_THREADS = 4

N_FOLDS = 5

RANDOM_STATE = 42

TEST_SIZE = 0.2

TIMEOUT = 300

TARGET_NAME = 'TARGET'

[5]:

DATASET_DIR = '../data/'

DATASET_NAME = 'sampled_app_train.csv'

DATASET_FULLNAME = os.path.join(DATASET_DIR, DATASET_NAME)

DATASET_URL = 'https://raw.githubusercontent.com/AILab-MLTools/LightAutoML/master/examples/data/sampled_app_train.csv'

0.3. Imported models setup

For better reproducibility fix numpy random seed with max number of threads for Torch (which usually try to use all the threads on server):

[6]:

np.random.seed(RANDOM_STATE)

torch.set_num_threads(N_THREADS)

0.4. Data loading

Let’s check the data we have:

[7]:

if not os.path.exists(DATASET_FULLNAME):

os.makedirs(DATASET_DIR, exist_ok=True)

dataset = requests.get(DATASET_URL).text

with open(DATASET_FULLNAME, 'w') as output:

output.write(dataset)

[8]:

data = pd.read_csv(DATASET_DIR + DATASET_NAME)

data.head()

[8]:

| SK_ID_CURR | TARGET | NAME_CONTRACT_TYPE | CODE_GENDER | FLAG_OWN_CAR | FLAG_OWN_REALTY | CNT_CHILDREN | AMT_INCOME_TOTAL | AMT_CREDIT | AMT_ANNUITY | ... | FLAG_DOCUMENT_18 | FLAG_DOCUMENT_19 | FLAG_DOCUMENT_20 | FLAG_DOCUMENT_21 | AMT_REQ_CREDIT_BUREAU_HOUR | AMT_REQ_CREDIT_BUREAU_DAY | AMT_REQ_CREDIT_BUREAU_WEEK | AMT_REQ_CREDIT_BUREAU_MON | AMT_REQ_CREDIT_BUREAU_QRT | AMT_REQ_CREDIT_BUREAU_YEAR | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 313802 | 0 | Cash loans | M | N | Y | 0 | 270000.0 | 327024.0 | 15372.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| 1 | 319656 | 0 | Cash loans | F | N | N | 0 | 108000.0 | 675000.0 | 19737.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 2 | 207678 | 0 | Revolving loans | F | Y | Y | 2 | 112500.0 | 270000.0 | 13500.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| 3 | 381593 | 0 | Cash loans | F | N | N | 1 | 67500.0 | 142200.0 | 9630.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 4.0 |

| 4 | 258153 | 0 | Cash loans | F | Y | Y | 0 | 337500.0 | 1483231.5 | 46570.5 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 2.0 | 0.0 | 0.0 |

5 rows × 122 columns

[9]:

data.shape

[9]:

(10000, 122)

0.5. Data splitting for train-holdout

As we have only one file with target values, we can split it into 80%-20% for holdout usage:

[10]:

train_data, test_data = train_test_split(

data,

test_size=TEST_SIZE,

stratify=data[TARGET_NAME],

random_state=RANDOM_STATE

)

print(f'Data is splitted. Parts sizes: train_data = {train_data.shape}, test_data = {test_data.shape}')

train_data.head()

Data is splitted. Parts sizes: train_data = (8000, 122), test_data = (2000, 122)

[10]:

| SK_ID_CURR | TARGET | NAME_CONTRACT_TYPE | CODE_GENDER | FLAG_OWN_CAR | FLAG_OWN_REALTY | CNT_CHILDREN | AMT_INCOME_TOTAL | AMT_CREDIT | AMT_ANNUITY | ... | FLAG_DOCUMENT_18 | FLAG_DOCUMENT_19 | FLAG_DOCUMENT_20 | FLAG_DOCUMENT_21 | AMT_REQ_CREDIT_BUREAU_HOUR | AMT_REQ_CREDIT_BUREAU_DAY | AMT_REQ_CREDIT_BUREAU_WEEK | AMT_REQ_CREDIT_BUREAU_MON | AMT_REQ_CREDIT_BUREAU_QRT | AMT_REQ_CREDIT_BUREAU_YEAR | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6444 | 112261 | 0 | Cash loans | F | N | N | 1 | 90000.0 | 640080.0 | 31261.5 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |

| 3586 | 115058 | 0 | Cash loans | F | N | Y | 0 | 180000.0 | 239850.0 | 23850.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.0 |

| 9349 | 326623 | 0 | Cash loans | F | N | Y | 0 | 112500.0 | 337500.0 | 31086.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 |

| 7734 | 191976 | 0 | Cash loans | M | Y | Y | 1 | 67500.0 | 135000.0 | 9018.0 | ... | 0 | 0 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN |

| 2174 | 281519 | 0 | Revolving loans | F | N | Y | 0 | 67500.0 | 202500.0 | 10125.0 | ... | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 |

5 rows × 122 columns

Note: missing values (NaN and other) in the data should be left as is, unless the reason for their presence or their specific meaning are known. Otherwise, AutoML model will perceive the filled NaNs as a true pattern between the data and the target variable, without knowledge and assumptions about missing values, which can negatively affect the model quality. LighAutoML can deal with missing values and outliers automatically.

1. Task definition

1.1. Task type

First we need to create Task object - the class to setup what task LightAutoML model should solve with specific loss and metric if necessary (more info can be found here in our documentation).

The following task types are available:

'binary'- for binary classification.'reg’- for regression.‘multiclass’- for multiclass classification.'multi:reg- for multiple regression.'multilabel'- for multi-label classification.

In this example we will consider a binary classification:

[11]:

task = Task('binary')

Note: only logloss loss is available for binary task and it is the default loss. Default metric for binary classification is ROC-AUC. See more info about available and default losses and metrics here.

Depending on the task, you can and shold choose exactly those metrics and losses that you want and need to optimize.

1.2. Feature roles setup

To solve the task, we need to setup columns roles. LightAutoML can automatically define types and roles of data columns, but it is possible to specify it directly through the dictionary parameter roles when training AutoML model (see next section “AutoML training”). Specific roles can be specified using a string with the name (any role can be set like this). So the key in dictionary must be the name of the role, the value must be a list of the names of the corresponding columns in dataset.

The only role you must setup is 'target' role (that is column with target variable obviously), everything else ('drop', 'numeric', 'categorical', 'group', 'weights' etc) is up to user:

[12]:

roles = {

'target': TARGET_NAME,

'drop': ['SK_ID_CURR']

}

You can also optionally specify the following roles:

'numeric'- numerical feature'category'- categorical feature'text'- text data'datetime'- features with date and time'date'- features with date only'group'- features by which the data can be divided into groups and which can be taken into account for group k-fold validation (so the same group is not represented in both testing and training sets)'drop'- features to drop, they will not be used in model building'weights'- object weights for the loss and metric'path'- image file paths (for CV tasks)'treatment'- object group in uplift modelling tasks: treatment or control

Note: role name can be written in any case. Also it is possible to pass individual objects of role classes with specific arguments instead of strings with role names for specific tasks and more optimal pipeline construction (more details).

For example, to set the date role, you can use the DatetimeRole class.

[13]:

#from lightautoml.dataset.roles import DatetimeRole

Different seasonality can be extracted from the data through the seasonality parameter: years ('y'), months ('m'), days ('d'), weekdays ('wd'), hours ('hour'), minutes ('min'), seconds ('sec'), milliseconds ('ms'), nanoseconds ('ns'). This features will be considered as categorical. Another important parameter is base_date. It allows to specify the base date and convert the feature to the distances to this date (set to False by default). Also for

all roles classes there is a force_input parameter, and if it is True, then the corresponding features will pass all further feature selections and won’t be excluded (equals False by default). Also it is always possible to specify data type for all roles using dtype argument.

Here is an example of such a role assignment through a class object for date feature (but there is no such feature in the considered dataset):

[14]:

# roles = {

# DatetimeRole(base_date=False, seasonality=('d', 'wd', 'hour')): 'date_time'

# }

Any role can be set through a class object. Information about specific parameters of specific roles and other datailed information can be found here.

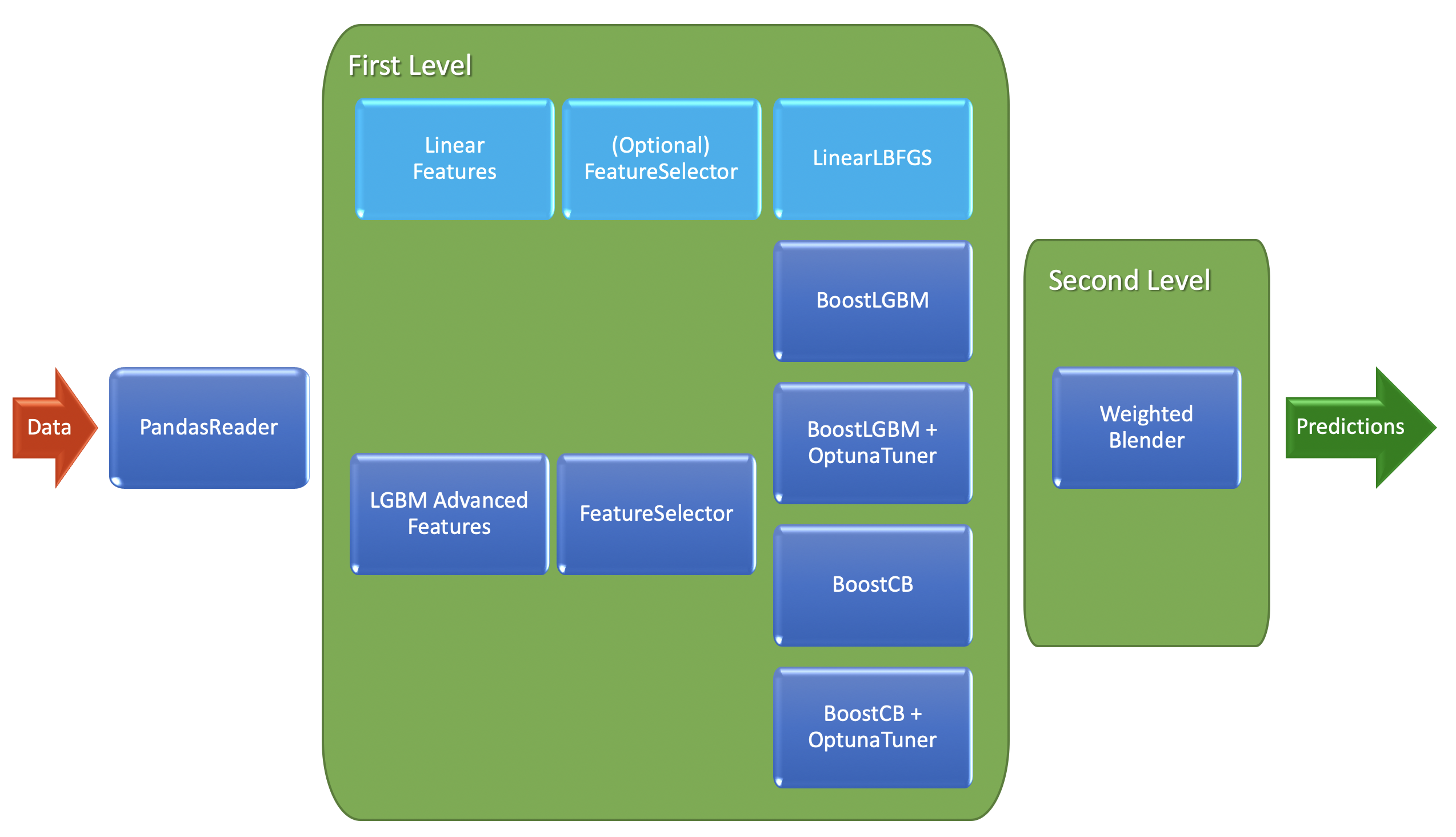

1.3. LightAutoML model creation - TabularAutoML preset

Next we are going to create LightAutoML model with TabularAutoML class - preset with default model structure in just several lines.

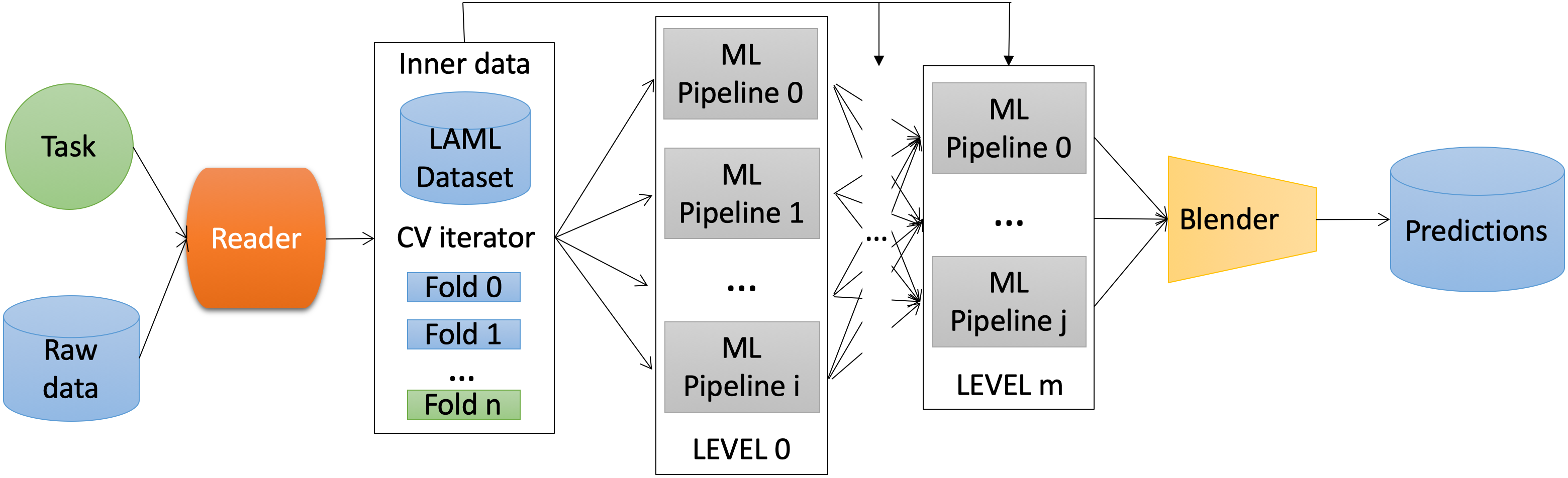

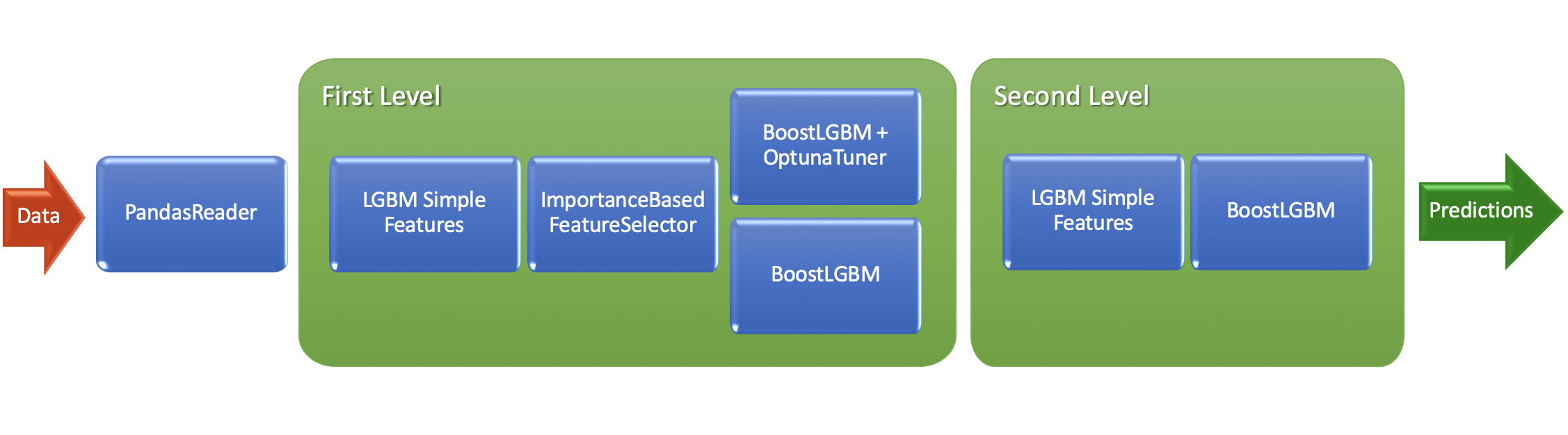

In general, the whole AutoML model consists of multiple levels, which can contain several pipelines with their own set of data processing methods and ML models. The outputs of one level are the inputs of the next, and on the last level predictions of previous level models are combined with blending procedure. All this can be combined into a model using the AutoML class and its various descendants (like TabularAutoML).

Let’s look at how the LightAutoML model is arranged and what it consists in general.

1.3.1 Reader object

First the task and data go into Reader object. It analyzes the data and extracts various valuable information from them. Also it can detect and remove useless features, conduct feature selection, determine types and roles etc. Let’s look at this steps in more detail.

Role and types guessing

Roles can be specified as a string or a specific class object, or defined automatically. For TabularAutoML preset 'numeric', 'datetime' and 'category' roles can be automatically defined. There are two ways of role defining. First is very simple: check if the value can be converted to a date ('datetime'), otherwise check if it can be converted to a number ('numeric'), otherwise declare it a category ('categorical'). But this method may not work well on large data

or when encoding categories with integers. The second method is based on statistics: the distributions of numerical features are considered, and how similar they are to the distributions of real or categorical value. Also different ways of feature encoding (as a number or as a category) are compared and based on normalized Gini index it is decided which encoding is better. For this case a set of specific rules is created, and if at least one of them is fullfilled, then the feature will be

assigned to numerical, otherwise to categorical. This check can be enabled or disabled using the advanced_roles parameter.

If roles are explicitly specified, automatic definition won’t be applied to the specified dataset columns. In the case of specifying a role as an object of a certain class, through its arguments, it is possible to set the processing parameters in more detail.

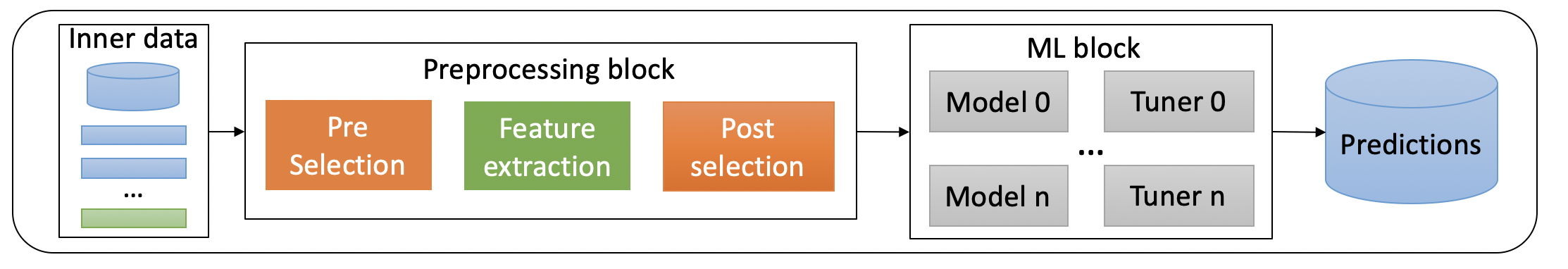

Feature selection

In general, the AutoML pipeline uses pre-selection, generation and post-selection of features. TabularAutoML has no post-selection stage. There are three feature selection methods: its absence, using features importances and more strict selection (forward selection). The GBM model is used to evaluate features importances. Importances can be calculated in 2 ways: based on splits (how many times a split was made for each feature in the entire ensemble) or using permutation feature importances

(mixing feature values during validation and assessing quality change in this case). Second method is harder but it requires holdout data. Then features with importance above a certain threshold are selected. Faster and more strict feature selection method is forward selection. Features are sorted in descending order of importance, then in blocks (size of 1 by default) a model is built based on selected features, and its quality is measured. Then the next block of features is added, and they are

saved if the quality has improved with them, and so on.

Also LightAutoML can merge some columns if it is adequate and leads to an improvement in the model quality (for example, an intersection between categorical variables). Different columns join options are considered, the best one is chosen by the normalized Gini index.

1.3.2 Machine learning pipelines architecture and training

As a result, after analyzing and processing the data, the Reader object forms and returns a LAMA Dataset. It contains the original data and markup with metainformation. In this dataset it is possible to see the roles defined by the Reader object, selected features etc. Then ML pipelines are trained on this data.

Each such pipeline is one or more machine learning algorithms that share one post-processing block and one validation scheme. Several such pipelines can be trained in parallel on one dataset, and they form a level. Number of levels can be unlimited as possible. List of all levels of AutoML pipeline is available via .levels attribute of AutoML instance. Level predictions can be inputs to other models or ML pipelines (i. e. stacking scheme). As inputs for subsequent levels, it is possible

to use the original data by setting skip_conn argument in True when initializing preset instance. At the last level, if there are several pipelines, blending is used to build a prediction.

Different types of features are processed depending on the models. Numerical features are processed for linear model preprocessing: standardization, replacing missing values with median, discretization, log odds (if feature is probability - output of previous level). Categories are processed using label encoding (by default), one hot encoding, ordinal encoding, frequency encoding, out of fold target encoding.

The following algorithms are available in the LightAutoML: linear regression with L2 regularization, LightGBM, CatBoost, random forest.

By default KFold cross-validation is used during training at all levels (for hyperparameter optimization and building out-of-fold prediction during training), and for each algorithm a separate model is built for each validation fold, and their predictions are averaged. So the predictions at each level and the resulting prediction during training are out-of-fold predictions. But it is also possible to just pass a holdout data for validation or use custom cross-validation schemes, setting

cv_iter iterator returning the indices of the objects for validation. LightAutoML has ready-made iterators, for example, TimeSeriesIterator for time series split. To further reduce the effect of overfitting, it is possible to use nested cross-validation (nested_cv parameter), but it is not used by default.

Prediction on new data is the averaging of models over all folds from validation and blending.

Hyperparameter tuning of machine learning algorithms can be performed during training (early stopping by the number of trees in gradient boosting or the number of training epochs of neural networks etc), based on expert rules (according to data characteristics and empirical recommendations, so-called expert parameters), by the sequential model-based optimization (SMBO, bayesian optimization: Optuna with TPESampler) or by grid search. LightGBM and CatBoost can be used with parameter tuning or with expert parameters, with no tuning. For linear regression parameters are always tuned using warm start model training technique.

At the last level blending is used to build a prediction. There are three available blending methods: choosing the best model based on a given metric (other models are just discarded), simple averaging of all models, or weighted averaging (weights are selected using coordinate descent algorithm with optimization of a given metric). TabularAutoML uses the latter strategy by default. It is worth noting that, unlike stacking, blending can exclude models from composition.

1.3.3 Timing

When creating AutoML object, a certain time limit is set, and it schedules a list of tasks that it can complete during this time, and it will initially allocate approximately equal time for each task. In the process of solving objectives, it understands how to adjust the time allocated to different subtasks. If AutoML finished working earlier than set timeout, it means that it completed the entire list of tasks. If AutoML worked to the limit and turned off, then most likely it sacrificed something, for example, reduced the number of algorithms for training, realized that it would not have time to train the next one, or it might not calculate the full cross-validation cycle for one of the models (then on folds, where the model has not trained, the predictiuons will be NaN, and the model related to this fold will not participate in the final averaging). The resulting quality is evaluated at the blending stage, and if necessary and possible, the composition will be corrected.

If you do not set the time for AutoML during initialization, then by default it will be equal to a very large number, that is, sooner or later AutoML will complete all tasks.

1.3.4 LightAutoML model creation

So the entire AutoML pipeline can be composed from various parts by user (see custom pipeline tutorial), but it is possible to use presets - in a certain sense, fixed strategies for dynamic pipeline building.

Here is a default AutoML pipeline for binary classification and regression tasks (TabularAutoML preset):

Another example:

Let’s discuss some of the params we can setup: - task - the type of the ML task (the only must have parameter) - timeout - time limit in seconds for model to train - cpu_limit - vCPU count for model to use - reader_params - parameter change for Reader object inside preset, which works on the first step of data preparation: automatic feature typization, preliminary almost-constant features, correct CV setup etc. For example, we setup n_jobs threads for typization algo,

cv folds and random_state as inside CV seed. - general_params - general parameters dictionary, in which it is possible to specify a list of algorithms used ('use_algos'), nested CV using ('nested_cv') etc.

Important note: reader_params key is one of the YAML config keys, which is used inside TabularAutoML preset. More details on its structure with explanation comments can be found on the link attached. Each key from this config can be modified with user settings during preset object initialization. To get more info about different parameters setting (for example, ML algos which can

be used in general_params->use_algos) please take a look at our article on TowardsDataScience.

Moreover, to receive the automatic report for our model we will use ReportDeco decorator and work with the decorated version in the same way as we do with usual one.

[15]:

automl = TabularAutoML(

task = task,

timeout = TIMEOUT,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE},

)

2. AutoML training

To run autoML training use fit_predict method.

Main arguments:

train_data- dataset to train.roles- column roles dict.verbose- controls the verbosity: the higher, the more messages: <1 : messages are not displayed; >=1 : the computation process for layers is displayed; >=2 : the information about folds processing is also displayed; >=3 : the hyperparameters optimization process is also displayed; >=4 : the training process for every algorithm is displayed;

Note: out-of-fold prediction is calculated during training and returned from the fit_predict method

[16]:

%%time

out_of_fold_predictions = automl.fit_predict(train_data, roles = roles, verbose = 1)

[11:02:15] Stdout logging level is INFO.

[11:02:15] Copying TaskTimer may affect the parent PipelineTimer, so copy will create new unlimited TaskTimer

[11:02:15] Task: binary

[11:02:15] Start automl preset with listed constraints:

[11:02:15] - time: 300.00 seconds

[11:02:15] - CPU: 4 cores

[11:02:15] - memory: 16 GB

[11:02:15] Train data shape: (8000, 122)

[11:02:18] Layer 1 train process start. Time left 297.23 secs

[11:02:18] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:02:21] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = 0.7351175537276247

[11:02:21] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:02:21] Time left 294.29 secs

[11:02:23] Selector_LightGBM fitting and predicting completed

[11:02:24] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:02:38] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = 0.7139016076564749

[11:02:38] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:02:38] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 1.00 secs

[11:02:43] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:02:43] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:02:58] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = 0.6809442140928409

[11:02:58] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:02:58] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:03:03] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = 0.7205654339932637

[11:03:03] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:03:03] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 168.11 secs

[11:04:57] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:04:57] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:05:06] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = 0.7450030072205183

[11:05:06] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:05:06] Time left 129.22 secs

[11:05:06] Layer 1 training completed.

[11:05:06] Blending: optimization starts with equal weights and score 0.747978018908178

[11:05:06] Blending: iteration 0: score = 0.7502378457373473, weights = [0.25709525 0.13884845 0.0858601 0.05886992 0.4593263 ]

[11:05:06] Blending: iteration 1: score = 0.7502116959937104, weights = [0.2473392 0.14313795 0.07531787 0.06068861 0.47351643]

[11:05:06] Blending: iteration 2: score = 0.7502116959937104, weights = [0.2473392 0.14313795 0.07531787 0.06068861 0.47351643]

[11:05:06] Blending: no score update. Terminated

[11:05:06] Automl preset training completed in 171.05 seconds

[11:05:06] Model description:

Final prediction for new objects (level 0) =

0.24734 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.14314 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.07532 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.06069 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.47352 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

CPU times: user 11min 19s, sys: 1min 30s, total: 12min 50s

Wall time: 2min 51s

After training we can see logs with all the progress, final scores, weights assigned to the models in the final prediction etc.

Note that in this fit_predict you receive the model with only 3 out of 5 LightGBM models (you can see that from the log line in the end 0.25685 * (3 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM)) - to fix it you can set the bigger timeout to make LightAutoML train all the models.

3. Prediction on holdout and model evaluation

Now we can use trained AutoML model to build predictions on holdout and evaluate model quality. Note that in case of classification tasks LightAutoML model returns probabilities as predictions.

[17]:

%%time

test_predictions = automl.predict(test_data)

print(f'Prediction for test_data:\n{test_predictions}\nShape = {test_predictions.shape}')

Prediction for test_data:

array([[0.06620218],

[0.06621333],

[0.03255654],

...,

[0.06863909],

[0.04567214],

[0.2046678 ]], dtype=float32)

Shape = (2000, 1)

CPU times: user 3.33 s, sys: 452 ms, total: 3.78 s

Wall time: 646 ms

[18]:

print(f'OOF score: {roc_auc_score(train_data[TARGET_NAME].values, out_of_fold_predictions.data[:, 0])}')

print(f'HOLDOUT score: {roc_auc_score(test_data[TARGET_NAME].values, test_predictions.data[:, 0])}')

OOF score: 0.7502681411720484

HOLDOUT score: 0.7327955163043479

4. Model analysis

4.1. Reports

You can obtain the description of the resulting pipeline:

[19]:

print(automl.create_model_str_desc())

Final prediction for new objects (level 0) =

0.24734 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.14314 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.07532 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.06069 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.47352 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

Also for this purposes LightAutoML have ReportDeco, use it to build detailed reports:

[20]:

RD = ReportDeco(output_path = 'tabularAutoML_model_report')

automl_rd = RD(

TabularAutoML(

task = task,

timeout = TIMEOUT,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE}

)

)

[21]:

%%time

out_of_fold_predictions = automl_rd.fit_predict(train_data, roles = roles, verbose = 1)

[11:05:07] Stdout logging level is INFO.

[11:05:07] Task: binary

[11:05:07] Start automl preset with listed constraints:

[11:05:07] - time: 300.00 seconds

[11:05:07] - CPU: 4 cores

[11:05:07] - memory: 16 GB

[11:05:07] Train data shape: (8000, 122)

[11:05:08] Layer 1 train process start. Time left 299.03 secs

[11:05:09] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:05:11] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = 0.7351175537276247

[11:05:11] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:05:11] Time left 296.09 secs

[11:05:14] Selector_LightGBM fitting and predicting completed

[11:05:14] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:05:28] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = 0.7139016076564749

[11:05:28] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:05:28] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 1.00 secs

[11:05:34] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:05:34] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:05:48] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = 0.6809442140928409

[11:05:48] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:05:48] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:05:53] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = 0.7205654339932637

[11:05:53] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:05:53] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 173.09 secs

[11:07:42] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:07:42] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:07:50] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = 0.7450030072205183

[11:07:50] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:07:50] Time left 137.02 secs

[11:07:50] Layer 1 training completed.

[11:07:50] Blending: optimization starts with equal weights and score 0.747978018908178

[11:07:51] Blending: iteration 0: score = 0.7502378457373473, weights = [0.25709525 0.13884845 0.0858601 0.05886992 0.4593263 ]

[11:07:51] Blending: iteration 1: score = 0.7502116959937104, weights = [0.2473392 0.14313795 0.07531787 0.06068861 0.47351643]

[11:07:51] Blending: iteration 2: score = 0.7502116959937104, weights = [0.2473392 0.14313795 0.07531787 0.06068861 0.47351643]

[11:07:51] Blending: no score update. Terminated

[11:07:51] Automl preset training completed in 163.25 seconds

[11:07:51] Model description:

Final prediction for new objects (level 0) =

0.24734 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.14314 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.07532 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.06069 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.47352 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

CPU times: user 11min 17s, sys: 1min 41s, total: 12min 59s

Wall time: 2min 46s

The report will be available in the folder with specified name (output_path argument in ReportDeco initialization).

[22]:

!ls tabularAutoML_model_report

feature_importance.png test_roc_curve_1.png

lama_interactive_report.html valid_distribution_of_logits.png

test_distribution_of_logits_1.png valid_pie_f1_metric.png

test_pie_f1_metric_1.png valid_pr_curve.png

test_pr_curve_1.png valid_preds_distribution_by_bins.png

test_preds_distribution_by_bins_1.png valid_roc_curve.png

[23]:

%%time

test_predictions = automl_rd.predict(test_data)

print(f'Prediction for test_data:\n{test_predictions}\nShape = {test_predictions.shape}')

Prediction for test_data:

array([[0.06620218],

[0.06621333],

[0.03255654],

...,

[0.06863909],

[0.04567214],

[0.2046678 ]], dtype=float32)

Shape = (2000, 1)

CPU times: user 14.3 s, sys: 9.01 s, total: 23.3 s

Wall time: 2.59 s

[24]:

print(f'OOF score: {roc_auc_score(train_data[TARGET_NAME].values, out_of_fold_predictions.data[:, 0])}')

print(f'HOLDOUT score: {roc_auc_score(test_data[TARGET_NAME].values, test_predictions.data[:, 0])}')

OOF score: 0.7502681411720484

HOLDOUT score: 0.7327955163043479

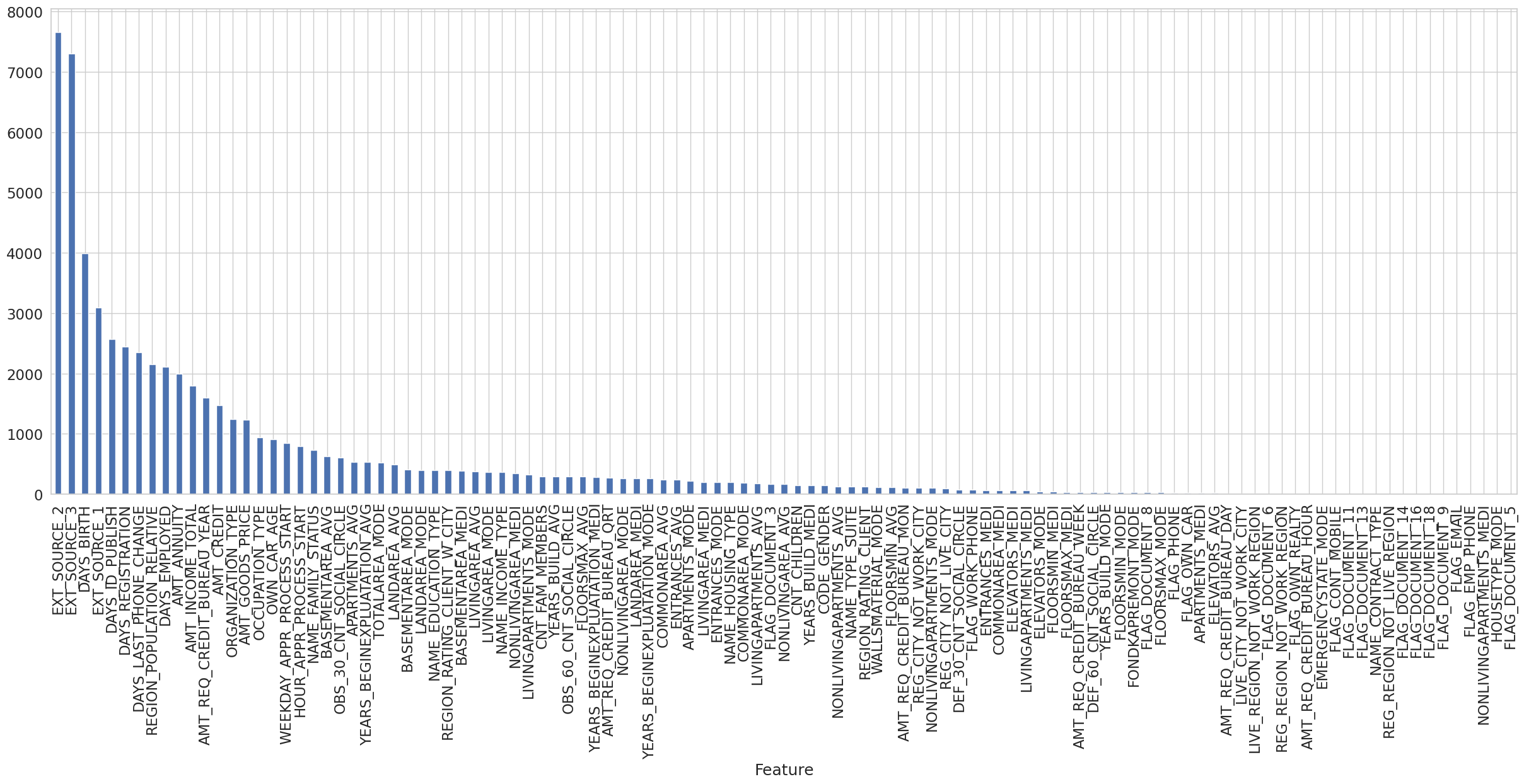

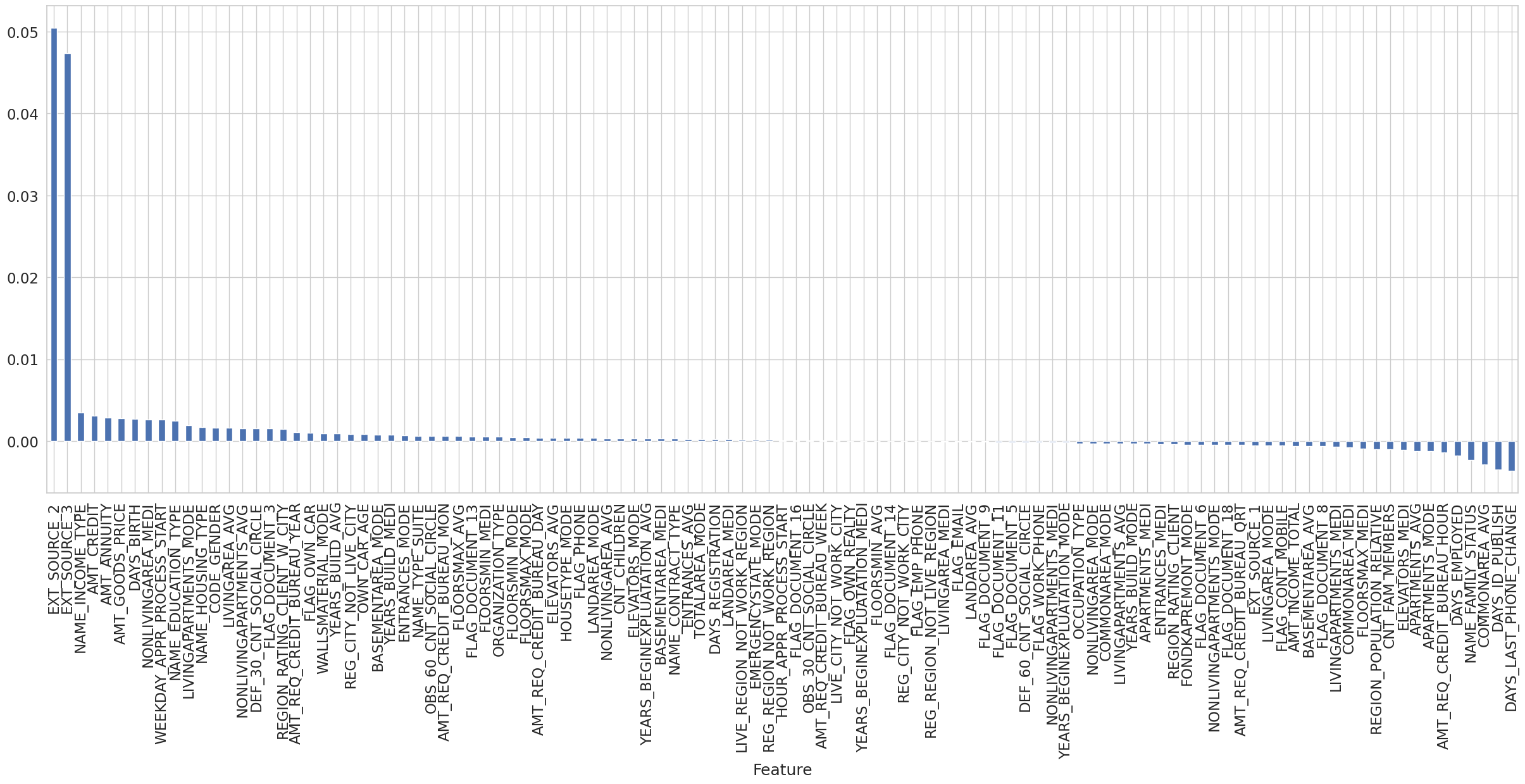

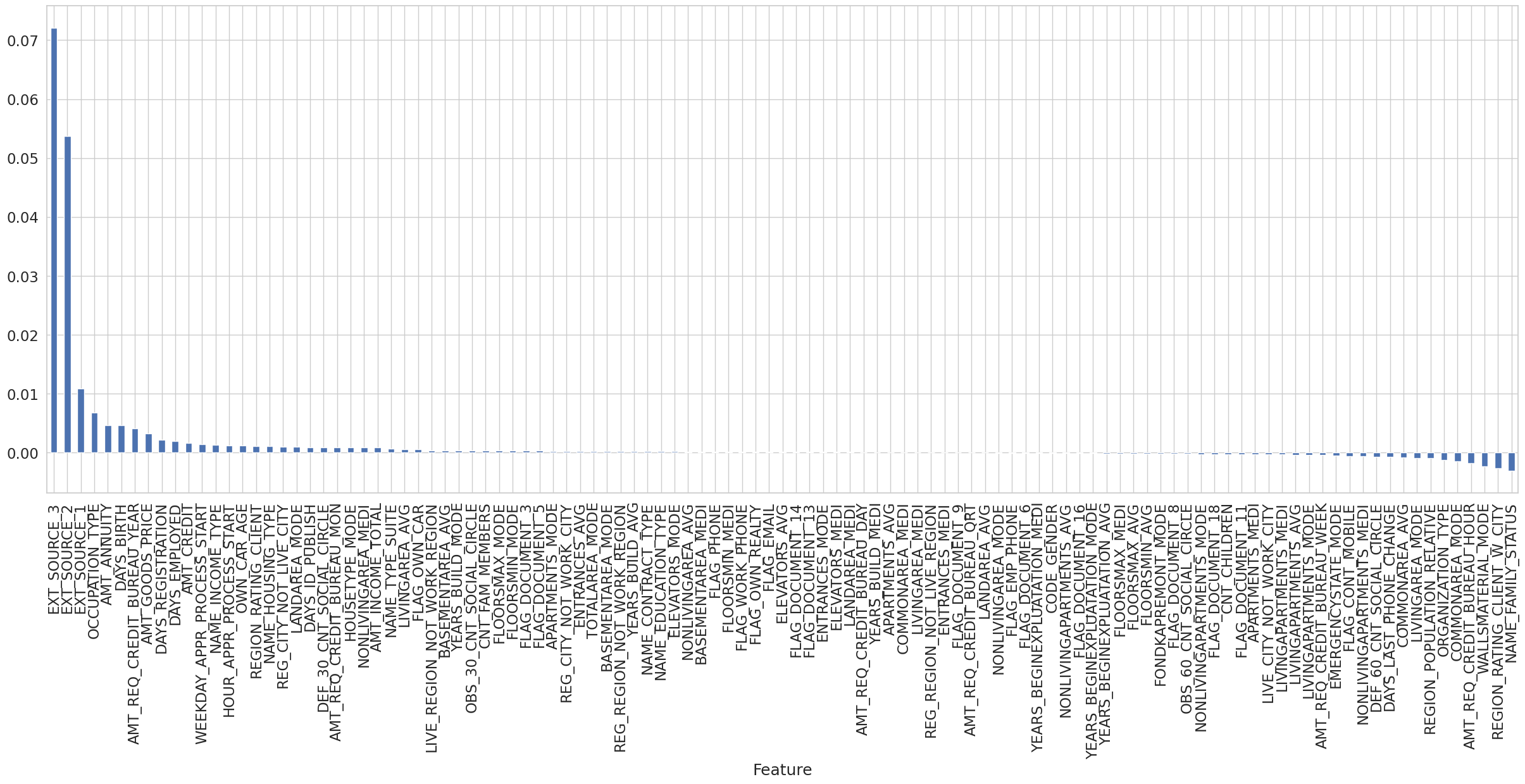

4.2 Feature importances calculation

For feature importances calculation we have 2 different methods in LightAutoML: - Fast (fast) - this method uses feature importances from feature selector LGBM model inside LightAutoML. It works extremely fast and almost always (almost because of situations, when feature selection is turned off or selector was removed from the final models with all GBM models). There is no need to use new labelled data. - Accurate (accurate) - this method calculate features permutation importances for

the whole LightAutoML model based on the new labelled data. It always works but can take a lot of time to finish (depending on the model structure, new labelled dataset size etc.).

In the cell below we will use automl_rd.model instead automl_rd because we want to take the importances from the model, not from the report. But be carefull - everything, which is calculated using automl_rd.model will not go to the report.

[25]:

%%time

# Fast feature importances calculation

fast_fi = automl_rd.model.get_feature_scores('fast')

fast_fi.set_index('Feature')['Importance'].plot.bar(figsize = (30, 10), grid = True)

CPU times: user 275 ms, sys: 117 ms, total: 393 ms

Wall time: 202 ms

[25]:

<Axes: xlabel='Feature'>

[26]:

%%time

# Accurate feature importances calculation with detailed info (Permutation importances) - can take long time to calculate

accurate_fi = automl_rd.model.get_feature_scores('accurate', test_data, silent = True)

CPU times: user 6min 32s, sys: 20.1 s, total: 6min 52s

Wall time: 1min 7s

[27]:

accurate_fi.set_index('Feature')['Importance'].plot.bar(figsize = (30, 10), grid = True)

[27]:

<Axes: xlabel='Feature'>

Bonus: where is the automatic report?

As we used automl_rd in our training and prediction cells, it is already ready in the folder we specified - you can check the output folder and find the tabularAutoML_model_report folder with lama_interactive_report.html report inside (or just click this link for short). It’s interactive so you can click the black triangles on the left of the texts to go deeper in selected part.

5. Spending more from TIMEOUT - TabularUtilizedAutoML usage

To spend (almost) all the TIMEOUT time for building the model we can use TabularUtilizedAutoML preset instead of TabularAutoML, which has the same API. By default TabularUtilizedAutoML model trains with 7 different parameter configurations (see this for more details) sequentially, and if there is time left, then whole AutoML pipeline with these configs is run again with another

cross-validation seed, and so on. Then results for each pipeline model are averaged over the considered validation seeds, and all averaged results at the end are also combined through blending. User can set his own set of configs by passing list of paths to according files in configs_list argument during TabularUtilizedAutoML instance initialization. Such configs allow the user to configure all pipeline parameters and can be used for any available preset.

[28]:

utilized_automl = TabularUtilizedAutoML(

task = task,

timeout = 900,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE},

)

[29]:

%%time

out_of_fold_predictions = utilized_automl.fit_predict(train_data, roles = roles, verbose = 1)

[11:09:09] Start automl utilizator with listed constraints:

[11:09:09] - time: 900.00 seconds

[11:09:09] - CPU: 4 cores

[11:09:09] - memory: 16 GB

[11:09:09] If one preset completes earlier, next preset configuration will be started

[11:09:09] ==================================================

[11:09:09] Start 0 automl preset configuration:

[11:09:09] conf_0_sel_type_0.yml, random state: {'reader_params': {'random_state': 42}, 'nn_params': {'random_state': 42}, 'general_params': {'return_all_predictions': False}}

[11:09:09] Stdout logging level is INFO.

[11:09:09] Task: binary

[11:09:09] Start automl preset with listed constraints:

[11:09:09] - time: 900.00 seconds

[11:09:09] - CPU: 4 cores

[11:09:09] - memory: 16 GB

[11:09:09] Train data shape: (8000, 122)

[11:09:10] Layer 1 train process start. Time left 899.10 secs

[11:09:10] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:09:13] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = 0.7351175537276247

[11:09:13] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:09:13] Time left 896.26 secs

[11:09:13] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:09:30] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = 0.7208359669101568

[11:09:30] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:09:30] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 103.73 secs

[11:11:14] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:11:14] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:11:25] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = 0.703382394929586

[11:11:25] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:11:25] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:11:30] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = 0.7216183332238446

[11:11:30] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:11:30] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 300.00 secs

[11:13:24] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:13:24] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:13:33] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = 0.7486773651008073

[11:13:33] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:13:33] Time left 636.25 secs

[11:13:33] Layer 1 training completed.

[11:13:33] Blending: optimization starts with equal weights and score 0.7510956848883608

[11:13:33] Blending: iteration 0: score = 0.7530033405765996, weights = [0.24248882 0.0901353 0.11209824 0.09796461 0.45731303]

[11:13:33] Blending: iteration 1: score = 0.7530437344895347, weights = [0.23387231 0.08203291 0.10811498 0.12389028 0.45208955]

[11:13:33] Blending: iteration 2: score = 0.7530445848877017, weights = [0.23470336 0.0871055 0.10718196 0.12282111 0.44818804]

[11:13:33] Blending: iteration 3: score = 0.7530445848877017, weights = [0.23470336 0.0871055 0.10718196 0.12282111 0.44818804]

[11:13:33] Blending: no score update. Terminated

[11:13:33] Automl preset training completed in 264.09 seconds

[11:13:33] Model description:

Final prediction for new objects (level 0) =

0.23470 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.08711 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.10718 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.12282 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.44819 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

[11:13:33] ==================================================

[11:13:33] Start 1 automl preset configuration:

[11:13:33] conf_1_sel_type_1.yml, random state: {'reader_params': {'random_state': 43}, 'nn_params': {'random_state': 43}, 'general_params': {'return_all_predictions': False}}

[11:13:33] Stdout logging level is INFO.

[11:13:33] Task: binary

[11:13:33] Start automl preset with listed constraints:

[11:13:33] - time: 635.86 seconds

[11:13:33] - CPU: 4 cores

[11:13:33] - memory: 16 GB

[11:13:33] Train data shape: (8000, 122)

[11:13:34] Layer 1 train process start. Time left 634.90 secs

[11:13:35] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:13:37] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = 0.7342794863339953

[11:13:37] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:13:37] Time left 631.81 secs

[11:13:41] Selector_LightGBM fitting and predicting completed

[11:13:41] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:13:55] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = 0.7392594180002504

[11:13:55] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:13:55] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 81.29 secs

[11:15:19] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:15:19] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:15:22] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = 0.7456401680471817

[11:15:22] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:15:22] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:15:26] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = 0.7142443181177967

[11:15:26] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:15:26] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 300.00 secs

[11:17:43] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:17:43] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:17:50] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = 0.7420738107341084

[11:17:50] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:17:50] Time left 378.85 secs

[11:17:50] Layer 1 training completed.

[11:17:50] Blending: optimization starts with equal weights and score 0.7493590655314701

[11:17:50] Blending: iteration 0: score = 0.7515980576055465, weights = [0.14799137 0.1691257 0.4277942 0. 0.25508872]

[11:17:50] Blending: iteration 1: score = 0.7518406336826982, weights = [0.23282948 0.12138072 0.40569347 0. 0.24009633]

[11:17:50] Blending: iteration 2: score = 0.7518406336826982, weights = [0.23282948 0.12138072 0.40569347 0. 0.24009633]

[11:17:50] Blending: no score update. Terminated

[11:17:50] Automl preset training completed in 257.29 seconds

[11:17:50] Model description:

Final prediction for new objects (level 0) =

0.23283 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.12138 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.40569 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.24010 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

[11:17:50] ==================================================

[11:17:50] Start 2 automl preset configuration:

[11:17:50] conf_2_select_mode_1_no_typ.yml, random state: {'reader_params': {'random_state': 44}, 'nn_params': {'random_state': 44}, 'general_params': {'return_all_predictions': False}}

[11:17:50] Stdout logging level is INFO.

[11:17:50] Task: binary

[11:17:50] Start automl preset with listed constraints:

[11:17:50] - time: 378.53 seconds

[11:17:50] - CPU: 4 cores

[11:17:50] - memory: 16 GB

[11:17:50] Train data shape: (8000, 122)

[11:17:51] Layer 1 train process start. Time left 378.43 secs

[11:17:51] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:17:53] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = 0.7369646185464611

[11:17:53] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:17:53] Time left 375.60 secs

[11:17:57] Selector_LightGBM fitting and predicting completed

[11:17:57] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:18:12] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = 0.7396506011570942

[11:18:12] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:18:12] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 2.40 secs

[11:18:23] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:18:23] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:18:38] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = 0.707420085426748

[11:18:38] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:18:38] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:18:43] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = 0.7208621166537937

[11:18:43] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:18:43] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 222.56 secs

[11:20:48] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:20:48] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:20:54] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = 0.7435819918833748

[11:20:54] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:20:54] Time left 195.02 secs

[11:20:54] Layer 1 training completed.

[11:20:54] Blending: optimization starts with equal weights and score 0.755773406306

[11:20:54] Blending: iteration 0: score = 0.7573862927295848, weights = [0.21980023 0.3309117 0.13515761 0.05808445 0.25604597]

[11:20:54] Blending: iteration 1: score = 0.7574197771574124, weights = [0.22310945 0.3465078 0.10554349 0.06082201 0.26401728]

[11:20:54] Blending: iteration 2: score = 0.7574281748393119, weights = [0.22096376 0.34772167 0.10591323 0.06045922 0.26494217]

[11:20:54] Blending: iteration 3: score = 0.7574281748393119, weights = [0.22096376 0.34772167 0.10591323 0.06045922 0.26494217]

[11:20:54] Blending: no score update. Terminated

[11:20:54] Automl preset training completed in 183.90 seconds

[11:20:54] Model description:

Final prediction for new objects (level 0) =

0.22096 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.34772 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.10591 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.06046 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.26494 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

[11:20:54] ==================================================

[11:20:54] Blending: optimization starts with equal weights and score 0.7584169753080514

[11:20:54] Blending: iteration 0: score = 0.7593946143008483, weights = [0.26784256 0.14028242 0.59187496]

[11:20:54] Blending: iteration 1: score = 0.7594212955433396, weights = [0.26530033 0.14962645 0.58507323]

[11:20:54] Blending: iteration 2: score = 0.7594212955433396, weights = [0.26530033 0.14962645 0.58507323]

[11:20:54] Blending: no score update. Terminated

CPU times: user 46min 55s, sys: 5min 16s, total: 52min 12s

Wall time: 11min 45s

[30]:

print('out_of_fold_predictions:\n{}\nShape = {}'.format(out_of_fold_predictions, out_of_fold_predictions.shape))

out_of_fold_predictions:

array([[0.04793217],

[0.03117672],

[0.03577064],

...,

[0.03144814],

[0.18522368],

[0.11984787]], dtype=float32)

Shape = (8000, 1)

[31]:

print(utilized_automl.create_model_str_desc())

Final prediction for new objects =

0.26530 * 1 averaged models with config = "conf_0_sel_type_0.yml" and different CV random_states. Their structures:

Model #0.

================================================================================

Final prediction for new objects (level 0) =

0.23470 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.08711 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.10718 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.12282 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.44819 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

================================================================================

+ 0.14963 * 1 averaged models with config = "conf_1_sel_type_1.yml" and different CV random_states. Their structures:

Model #0.

================================================================================

Final prediction for new objects (level 0) =

0.23283 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.12138 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.40569 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.24010 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

================================================================================

+ 0.58507 * 1 averaged models with config = "conf_2_select_mode_1_no_typ.yml" and different CV random_states. Their structures:

Model #0.

================================================================================

Final prediction for new objects (level 0) =

0.22096 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.34772 * (5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM) +

0.10591 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.06046 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.26494 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

================================================================================

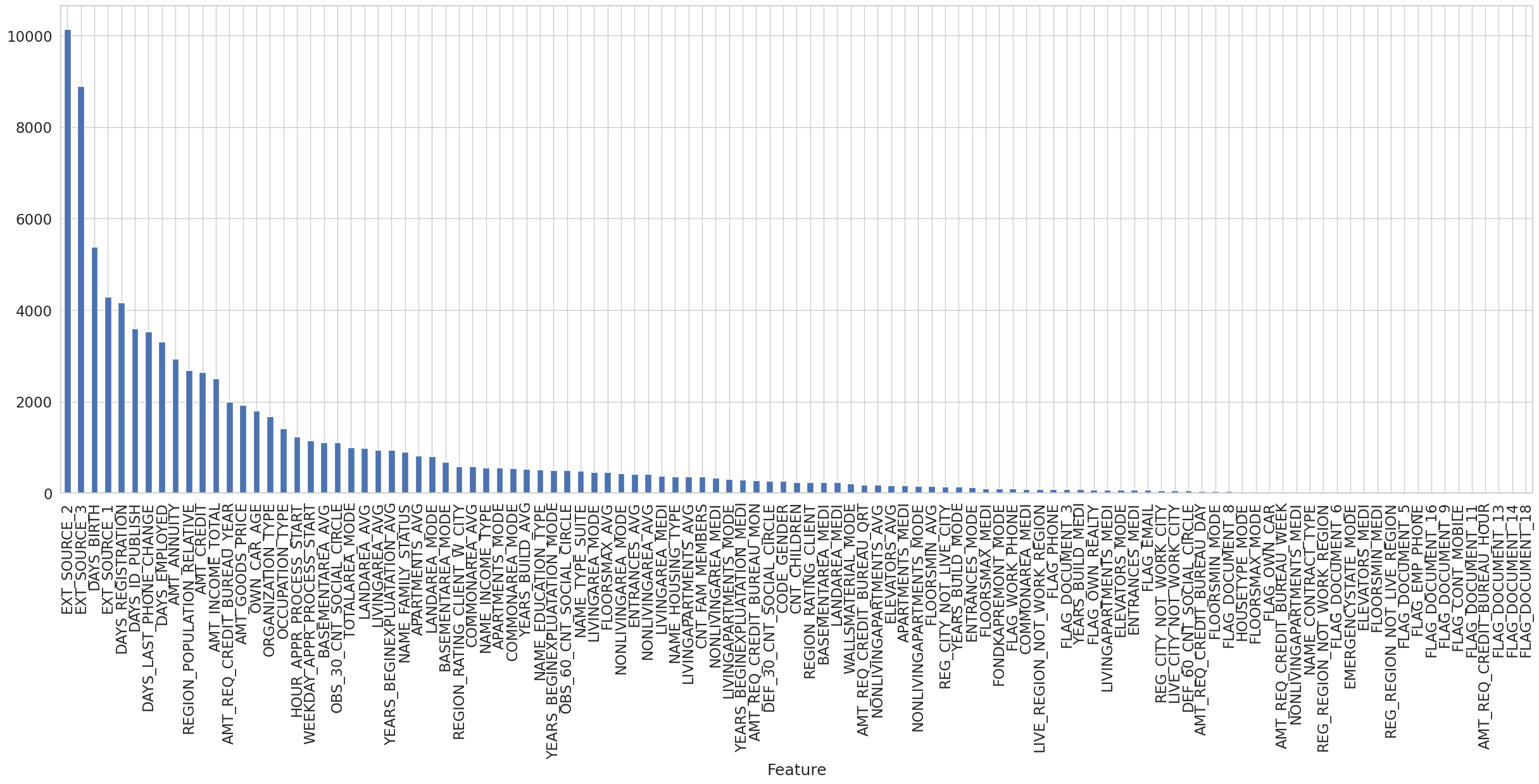

Feature importances calculation for TabularUtilizedAutoML:

[32]:

%%time

# Fast feature importances calculation

fast_fi = utilized_automl.get_feature_scores('fast', silent=False)

fast_fi.set_index('Feature')['Importance'].plot.bar(figsize = (30, 10), grid = True)

CPU times: user 294 ms, sys: 117 ms, total: 411 ms

Wall time: 204 ms

[32]:

<Axes: xlabel='Feature'>

Note that in TabularUtilizedAutoML the first config doesn’t have a LGBM feature selector (but second one already has it), so if there is enough time only for training with the first config, then 'fast' feature importance calculation method won’t work. 'accurate' method will still work correctly.

[33]:

%%time

# Accurate feature importances calculation

fast_fi = utilized_automl.get_feature_scores('accurate', test_data, silent=True)

fast_fi.set_index('Feature')['Importance'].plot.bar(figsize = (30, 10), grid = True)

CPU times: user 16min 44s, sys: 58.4 s, total: 17min 42s

Wall time: 3min 8s

[33]:

<Axes: xlabel='Feature'>

Prediction on holdout and metric calculation:

[34]:

%%time

test_predictions = utilized_automl.predict(test_data)

print(f'Prediction for test_data:\n{test_predictions}\nShape = {test_predictions.shape}')

Prediction for test_data:

array([[0.05962077],

[0.08053684],

[0.03293977],

...,

[0.05790396],

[0.04083381],

[0.21182759]], dtype=float32)

Shape = (2000, 1)

CPU times: user 9.32 s, sys: 386 ms, total: 9.7 s

Wall time: 1.8 s

[35]:

print(f'OOF score: {roc_auc_score(train_data[TARGET_NAME].values, out_of_fold_predictions.data[:, 0])}')

print(f'HOLDOUT score: {roc_auc_score(test_data[TARGET_NAME].values, test_predictions.data[:, 0])}')

OOF score: 0.7594212955433396

HOLDOUT score: 0.7356114130434782

It is also important to note that using a ReportDeco decorator for a TabularUtilizedAutoML is not yet available.

Bonus: another tasks examples

Regression task

Without big differences from the case of binary classification, LightAutoML can solve the regression problems.

Here you will use Ames Housing dataset. Load the data, split it into train and validation parts:

[36]:

data = pd.read_csv('https://raw.githubusercontent.com/reneemarama/aiming_high_in_aimes/master/datasets/train.csv')

data.head()

[36]:

| Id | PID | MS SubClass | MS Zoning | Lot Frontage | Lot Area | Street | Alley | Lot Shape | Land Contour | ... | Screen Porch | Pool Area | Pool QC | Fence | Misc Feature | Misc Val | Mo Sold | Yr Sold | Sale Type | SalePrice | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 109 | 533352170 | 60 | RL | NaN | 13517 | Pave | NaN | IR1 | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 3 | 2010 | WD | 130500 |

| 1 | 544 | 531379050 | 60 | RL | 43.0 | 11492 | Pave | NaN | IR1 | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 4 | 2009 | WD | 220000 |

| 2 | 153 | 535304180 | 20 | RL | 68.0 | 7922 | Pave | NaN | Reg | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 1 | 2010 | WD | 109000 |

| 3 | 318 | 916386060 | 60 | RL | 73.0 | 9802 | Pave | NaN | Reg | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 4 | 2010 | WD | 174000 |

| 4 | 255 | 906425045 | 50 | RL | 82.0 | 14235 | Pave | NaN | IR1 | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 3 | 2010 | WD | 138500 |

5 rows × 81 columns

[37]:

data.shape

[37]:

(2051, 81)

[38]:

train_data, test_data = train_test_split(

data,

test_size=TEST_SIZE,

random_state=RANDOM_STATE

)

print(f'Data is splitted. Parts sizes: train_data = {train_data.shape}, test_data = {test_data.shape}')

train_data.head()

Data is splitted. Parts sizes: train_data = (1640, 81), test_data = (411, 81)

[38]:

| Id | PID | MS SubClass | MS Zoning | Lot Frontage | Lot Area | Street | Alley | Lot Shape | Land Contour | ... | Screen Porch | Pool Area | Pool QC | Fence | Misc Feature | Misc Val | Mo Sold | Yr Sold | Sale Type | SalePrice | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1448 | 452 | 528174050 | 120 | RL | 47.0 | 6904 | Pave | NaN | IR1 | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 8 | 2009 | WD | 213000 |

| 1771 | 1697 | 528110070 | 20 | RL | 110.0 | 14226 | Pave | NaN | Reg | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 7 | 2007 | New | 395000 |

| 966 | 2294 | 923229100 | 80 | RL | NaN | 15957 | Pave | NaN | IR1 | Low | ... | 0 | 0 | NaN | MnPrv | NaN | 0 | 9 | 2007 | WD | 188000 |

| 1604 | 2449 | 528348010 | 60 | RL | 93.0 | 12090 | Pave | NaN | Reg | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 7 | 2006 | WD | 258000 |

| 1827 | 1859 | 533254100 | 80 | RL | 80.0 | 9600 | Pave | NaN | Reg | Lvl | ... | 0 | 0 | NaN | NaN | NaN | 0 | 8 | 2007 | WD | 187000 |

5 rows × 81 columns

Now we have a regression task, and it is necessary to specify it in Task object. Note that default loss and metric for regression task is MSE, but you can use any available functions, for example, MAE:

[39]:

task = Task('reg', loss='mae', metric='mae')

Specifying columns roles:

[40]:

roles = {

'target': 'SalePrice',

'drop': ['Id', 'PID']

}

Building AutoML model:

[41]:

automl = TabularAutoML(

task = task,

timeout = TIMEOUT,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE}

)

Training:

[42]:

%%time

out_of_fold_predictions = automl.fit_predict(train_data, roles = roles, verbose = 1)

[11:24:12] Stdout logging level is INFO.

[11:24:12] Task: reg

[11:24:12] Start automl preset with listed constraints:

[11:24:12] - time: 300.00 seconds

[11:24:12] - CPU: 4 cores

[11:24:12] - memory: 16 GB

[11:24:12] Train data shape: (1640, 81)

[11:24:15] Layer 1 train process start. Time left 297.53 secs

[11:24:15] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:24:22] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = -16095.787941834984

[11:24:22] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:24:22] Time left 290.36 secs

[11:24:25] Selector_LightGBM fitting and predicting completed

[11:24:26] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:24:46] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = -14962.662254668445

[11:24:46] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:24:46] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 1.00 secs

[11:24:53] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:24:53] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:25:11] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = -15086.075512099847

[11:25:11] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:25:11] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:25:19] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = -14711.966370522103

[11:25:19] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:25:19] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 144.46 secs

[11:27:41] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:27:41] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:27:46] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = -14724.322287061737

[11:27:46] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:27:46] Time left 86.35 secs

[11:27:46] Layer 1 training completed.

[11:27:46] Blending: optimization starts with equal weights and score -14167.255313929116

[11:27:46] Blending: iteration 0: score = -14135.606938357469, weights = [0.2613054 0.05990669 0.21512504 0.33101246 0.1326504 ]

[11:27:46] Blending: iteration 1: score = -14133.848594702744, weights = [0.2651733 0. 0.2282765 0.38584936 0.12070084]

[11:27:46] Blending: iteration 2: score = -14133.788066882622, weights = [0.26562527 0. 0.2276214 0.38920173 0.11755158]

[11:27:46] Blending: iteration 3: score = -14133.75897961128, weights = [0.26574308 0. 0.22703037 0.39105994 0.11616667]

[11:27:46] Blending: iteration 4: score = -14133.758250762196, weights = [0.2657241 0. 0.22708555 0.391032 0.11615837]

[11:27:46] Automl preset training completed in 213.77 seconds

[11:27:46] Model description:

Final prediction for new objects (level 0) =

0.26572 * (5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2) +

0.22709 * (5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM) +

0.39103 * (5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost) +

0.11616 * (5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost)

CPU times: user 14min 24s, sys: 1min 37s, total: 16min 2s

Wall time: 3min 33s

[43]:

%%time

test_predictions = automl.predict(test_data)

CPU times: user 2.4 s, sys: 199 ms, total: 2.6 s

Wall time: 408 ms

[44]:

print(f'Prediction for te_data:\n{test_predictions[:10]}\nShape = {test_predictions.shape}')

Prediction for te_data:

array([[134551.25],

[210638.33],

[275164.66],

[127255.11],

[200470.92],

[386540. ],

[159519.5 ],

[292220.12],

[159665.39],

[ 82598.77]], dtype=float32)

Shape = (411, 1)

[45]:

from sklearn.metrics import mean_absolute_error

print(f'OOF score: {mean_absolute_error(train_data[roles["target"]].values, out_of_fold_predictions.data[:, 0])}')

print(f'HOLDOUT score: {mean_absolute_error(test_data[roles["target"]].values, test_predictions.data[:, 0])}')

OOF score: 14133.75818883384

HOLDOUT score: 12320.59225783151

In the same way as in the previous example with binary classification, you can build a detailed report using ReportDeco, calculate feature importances, use TabularUtilizedAutoML etc.

Multi-class classification

Now let’s consider multi-class classification. Here you will use Anuran Calls (MFCCs) Data Set:

[46]:

from io import BytesIO

from zipfile import ZipFile

from urllib.request import urlopen

data = pd.read_csv(

ZipFile(

BytesIO(

urlopen(

"https://archive.ics.uci.edu/ml/machine-learning-databases/00406/Anuran%20Calls%20(MFCCs).zip"

).read()

)

).open('Frogs_MFCCs.csv')

)

data.head()

[46]:

| MFCCs_ 1 | MFCCs_ 2 | MFCCs_ 3 | MFCCs_ 4 | MFCCs_ 5 | MFCCs_ 6 | MFCCs_ 7 | MFCCs_ 8 | MFCCs_ 9 | MFCCs_10 | ... | MFCCs_17 | MFCCs_18 | MFCCs_19 | MFCCs_20 | MFCCs_21 | MFCCs_22 | Family | Genus | Species | RecordID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 0.152936 | -0.105586 | 0.200722 | 0.317201 | 0.260764 | 0.100945 | -0.150063 | -0.171128 | 0.124676 | ... | -0.108351 | -0.077623 | -0.009568 | 0.057684 | 0.118680 | 0.014038 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 |

| 1 | 1.0 | 0.171534 | -0.098975 | 0.268425 | 0.338672 | 0.268353 | 0.060835 | -0.222475 | -0.207693 | 0.170883 | ... | -0.090974 | -0.056510 | -0.035303 | 0.020140 | 0.082263 | 0.029056 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 |

| 2 | 1.0 | 0.152317 | -0.082973 | 0.287128 | 0.276014 | 0.189867 | 0.008714 | -0.242234 | -0.219153 | 0.232538 | ... | -0.050691 | -0.023590 | -0.066722 | -0.025083 | 0.099108 | 0.077162 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 |

| 3 | 1.0 | 0.224392 | 0.118985 | 0.329432 | 0.372088 | 0.361005 | 0.015501 | -0.194347 | -0.098181 | 0.270375 | ... | -0.136009 | -0.177037 | -0.130498 | -0.054766 | -0.018691 | 0.023954 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 |

| 4 | 1.0 | 0.087817 | -0.068345 | 0.306967 | 0.330923 | 0.249144 | 0.006884 | -0.265423 | -0.172700 | 0.266434 | ... | -0.048885 | -0.053074 | -0.088550 | -0.031346 | 0.108610 | 0.079244 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 |

5 rows × 26 columns

[47]:

train_data, test_data = train_test_split(

data,

test_size=TEST_SIZE,

shuffle=True,

random_state=RANDOM_STATE

)

print(f'Data is splitted. Parts sizes: train_data = {train_data.shape}, test_data = {test_data.shape}')

train_data.head()

Data is splitted. Parts sizes: train_data = (5756, 26), test_data = (1439, 26)

[47]:

| MFCCs_ 1 | MFCCs_ 2 | MFCCs_ 3 | MFCCs_ 4 | MFCCs_ 5 | MFCCs_ 6 | MFCCs_ 7 | MFCCs_ 8 | MFCCs_ 9 | MFCCs_10 | ... | MFCCs_17 | MFCCs_18 | MFCCs_19 | MFCCs_20 | MFCCs_21 | MFCCs_22 | Family | Genus | Species | RecordID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3838 | 1.0 | 0.389057 | 0.283855 | 0.558597 | 0.142120 | 0.006777 | -0.100356 | 0.015060 | 0.277700 | 0.062747 | ... | 0.221304 | 0.037511 | -0.019166 | -0.042803 | 0.024793 | 0.177462 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 22 |

| 293 | 1.0 | 0.339049 | -0.001276 | 0.075088 | 0.298091 | 0.190639 | 0.022295 | 0.049216 | 0.175380 | -0.007751 | ... | -0.299407 | -0.121592 | 0.108062 | 0.124870 | -0.004888 | -0.040086 | Leptodactylidae | Adenomera | AdenomeraAndre | 7 |

| 1593 | 1.0 | 0.211356 | 0.132368 | 0.530019 | 0.181015 | 0.047415 | -0.142114 | 0.000687 | 0.249328 | 0.032000 | ... | 0.207694 | 0.026302 | -0.167216 | -0.160102 | 0.084770 | 0.276008 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 15 |

| 4669 | 1.0 | 0.069635 | 0.170713 | 0.583894 | 0.275507 | 0.086236 | -0.152521 | -0.032355 | 0.268403 | 0.054420 | ... | 0.256234 | -0.116248 | -0.230951 | -0.058546 | 0.205891 | 0.211869 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 24 |

| 940 | 1.0 | 0.222777 | -0.069955 | 0.299370 | 0.318585 | 0.094394 | -0.019920 | 0.120537 | 0.192053 | 0.047852 | ... | -0.094684 | -0.104692 | -0.006204 | 0.023067 | -0.044556 | 0.006679 | Dendrobatidae | Ameerega | Ameeregatrivittata | 11 |

5 rows × 26 columns

Now we indicate that we have multi-class classification problem. Default metric and loss is cross-entropy function.

[48]:

task = Task('multiclass')

Set the column roles, build and train AutoML model:

[49]:

roles = {

'target': 'Species',

'drop': ['RecordID']

}

[50]:

automl = TabularAutoML(

task = task,

timeout = 900,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE}

)

Note that in case of multi-class classification default pipeline architecture has a slightly different look. First level is the same as level in default binary classification and regression, second level consists of linear regression and LightGBM model and the third is blending. It was decided to use two levels in default architecture based on the results of experiments on different tasks and data, where it gave an increase in model quality. Intuitively, this can be explained by the fact that only at the second and subsequent levels, the model that predicts the probability of belonging to one of the classes can see what the models that predict the rest of the classes see, that is, the models are able to exchange information about classes. Final prediction is blended from last pipeline level.

[51]:

%%time

out_of_fold_predictions = automl.fit_predict(train_data, roles = roles, verbose = 1)

[11:27:53] Stdout logging level is INFO.

[11:27:53] Task: multiclass

[11:27:53] Start automl preset with listed constraints:

[11:27:53] - time: 900.00 seconds

[11:27:53] - CPU: 4 cores

[11:27:53] - memory: 16 GB

[11:27:53] Train data shape: (5756, 26)

[11:27:55] Layer 1 train process start. Time left 897.05 secs

[11:27:56] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:28:04] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = -0.010843929660272388

[11:28:04] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:28:04] Time left 888.50 secs

[11:28:11] Selector_LightGBM fitting and predicting completed

[11:28:11] Start fitting Lvl_0_Pipe_1_Mod_0_LightGBM ...

[11:28:40] Fitting Lvl_0_Pipe_1_Mod_0_LightGBM finished. score = -0.008332313869425251

[11:28:40] Lvl_0_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:28:40] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ... Time budget is 63.52 secs

[11:29:45] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM completed

[11:29:45] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM ...

[11:29:52] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM finished. score = -0.005472618099950116

[11:29:52] Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM fitting and predicting completed

[11:29:52] Start fitting Lvl_0_Pipe_1_Mod_2_CatBoost ...

[11:30:17] Fitting Lvl_0_Pipe_1_Mod_2_CatBoost finished. score = -0.005273819979140362

[11:30:17] Lvl_0_Pipe_1_Mod_2_CatBoost fitting and predicting completed

[11:30:17] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ... Time budget is 300.00 secs

[11:35:20] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost completed

[11:35:20] Start fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost ...

[11:36:09] Fitting Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost finished. score = -0.004656292055580485

[11:36:09] Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost fitting and predicting completed

[11:36:09] Time left 403.14 secs

[11:36:09] Layer 1 training completed.

[11:36:09] Layer 2 train process start. Time left 403.13 secs

[11:36:09] Start fitting Lvl_1_Pipe_0_Mod_0_LinearL2 ...

[11:36:16] Fitting Lvl_1_Pipe_0_Mod_0_LinearL2 finished. score = -0.005844817771754601

[11:36:16] Lvl_1_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:36:16] Time left 396.05 secs

[11:36:17] Start fitting Lvl_1_Pipe_1_Mod_0_LightGBM ...

[11:36:47] Fitting Lvl_1_Pipe_1_Mod_0_LightGBM finished. score = -0.0079052970600202

[11:36:47] Lvl_1_Pipe_1_Mod_0_LightGBM fitting and predicting completed

[11:36:47] Time left 365.09 secs

[11:36:47] Layer 2 training completed.

[11:36:47] Blending: optimization starts with equal weights and score -0.006441429966915045

[11:36:47] Blending: iteration 0: score = -0.005844817771754601, weights = [1. 0.]

[11:36:48] Blending: iteration 1: score = -0.005844817771754601, weights = [1. 0.]

[11:36:48] Blending: no score update. Terminated

[11:36:48] Automl preset training completed in 535.01 seconds

[11:36:48] Model description:

Models on level 0:

5 averaged models Lvl_0_Pipe_0_Mod_0_LinearL2

5 averaged models Lvl_0_Pipe_1_Mod_0_LightGBM

5 averaged models Lvl_0_Pipe_1_Mod_1_Tuned_LightGBM

5 averaged models Lvl_0_Pipe_1_Mod_2_CatBoost

5 averaged models Lvl_0_Pipe_1_Mod_3_Tuned_CatBoost

Final prediction for new objects (level 1) =

1.00000 * (5 averaged models Lvl_1_Pipe_0_Mod_0_LinearL2)

CPU times: user 33min 8s, sys: 1min 53s, total: 35min 1s

Wall time: 8min 55s

[52]:

%%time

test_predictions = automl.predict(test_data)

print(f'Prediction for test_data:\n{test_predictions}\nShape = {test_predictions.shape}')

Prediction for test_data:

array([[9.99299645e-01, 2.99156236e-04, 5.64087532e-05, ...,

3.26732697e-05, 1.66250393e-05, 1.44051810e-05],

[2.37410968e-05, 1.63492259e-05, 1.99144088e-05, ...,

7.16923523e-06, 1.01458818e-05, 2.79938058e-06],

[8.50680008e-05, 9.98717308e-01, 1.62742770e-04, ...,

1.16484836e-04, 4.89874292e-05, 4.69718543e-05],

...,

[4.72079875e-04, 9.67716187e-05, 9.98478889e-01, ...,

3.78043551e-05, 2.92024688e-05, 2.44144667e-05],

[2.20251924e-04, 1.70577587e-05, 9.99231935e-01, ...,

2.65343951e-05, 3.58870748e-05, 2.94520050e-05],

[2.06183802e-04, 9.98132050e-01, 1.34161659e-04, ...,

8.58679778e-05, 9.00253362e-05, 5.26059594e-05]], dtype=float32)

Shape = (1439, 10)

CPU times: user 6.6 s, sys: 628 ms, total: 7.23 s

Wall time: 1.51 s

It is also important to note that the Reader object may re-label classes during training. To see the new labelling, you can call .class_mapping method of Reader object. If the output dict is empty, then the original order and class layout has been preserved.

[53]:

automl.reader.class_mapping

[53]:

{'AdenomeraHylaedactylus': 0,

'HypsiboasCordobae': 1,

'AdenomeraAndre': 2,

'Ameeregatrivittata': 3,

'HypsiboasCinerascens': 4,

'HylaMinuta': 5,

'LeptodactylusFuscus': 6,

'ScinaxRuber': 7,

'OsteocephalusOophagus': 8,

'Rhinellagranulosa': 9}

Just in case, in order to avoid problems, it is better to relabel known class labels when calculating metrics, for example, in this or similar way:

[54]:

mapping = automl.reader.class_mapping

def map_class(x):

return mapping[x]

mapped = np.vectorize(map_class)

mapped(train_data['Species'].values)

[54]:

array([0, 2, 0, ..., 4, 4, 3])

[55]:

from sklearn.metrics import log_loss

print(f'OOF score: {log_loss(mapped(train_data[roles["target"]].values), out_of_fold_predictions.data)}')

print(f'HOLDOUT score: {log_loss(mapped(test_data[roles["target"]].values), test_predictions.data)}')

OOF score: 0.005844810740496928

HOLDOUT score: 0.002458012646828699

Feature importance calculation, building reports, time management etc are also available for multiclass classification.

Multi-label classifcation

Now let’s consider multi-label classification task, here you will use the same dataset as in section above (Anuran Calls (MFCCs) Data Set ). Let’s pick labels in each column:

[56]:

data['AdenomeraHylaedactylus'] = (data['Species'] == 'AdenomeraHylaedactylus').astype(int)

data['Adenomera'] = (data['Genus'] == 'Adenomera').astype(int)

data['Leptodactylidae'] = (data['Family'] == 'Leptodactylidae').astype(int)

[57]:

data.head()

[57]:

| MFCCs_ 1 | MFCCs_ 2 | MFCCs_ 3 | MFCCs_ 4 | MFCCs_ 5 | MFCCs_ 6 | MFCCs_ 7 | MFCCs_ 8 | MFCCs_ 9 | MFCCs_10 | ... | MFCCs_20 | MFCCs_21 | MFCCs_22 | Family | Genus | Species | RecordID | AdenomeraHylaedactylus | Adenomera | Leptodactylidae | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 0.152936 | -0.105586 | 0.200722 | 0.317201 | 0.260764 | 0.100945 | -0.150063 | -0.171128 | 0.124676 | ... | 0.057684 | 0.118680 | 0.014038 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 | 0 | 1 | 1 |

| 1 | 1.0 | 0.171534 | -0.098975 | 0.268425 | 0.338672 | 0.268353 | 0.060835 | -0.222475 | -0.207693 | 0.170883 | ... | 0.020140 | 0.082263 | 0.029056 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 | 0 | 1 | 1 |

| 2 | 1.0 | 0.152317 | -0.082973 | 0.287128 | 0.276014 | 0.189867 | 0.008714 | -0.242234 | -0.219153 | 0.232538 | ... | -0.025083 | 0.099108 | 0.077162 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 | 0 | 1 | 1 |

| 3 | 1.0 | 0.224392 | 0.118985 | 0.329432 | 0.372088 | 0.361005 | 0.015501 | -0.194347 | -0.098181 | 0.270375 | ... | -0.054766 | -0.018691 | 0.023954 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 | 0 | 1 | 1 |

| 4 | 1.0 | 0.087817 | -0.068345 | 0.306967 | 0.330923 | 0.249144 | 0.006884 | -0.265423 | -0.172700 | 0.266434 | ... | -0.031346 | 0.108610 | 0.079244 | Leptodactylidae | Adenomera | AdenomeraAndre | 1 | 0 | 1 | 1 |

5 rows × 29 columns

[58]:

targets = ['Leptodactylidae', 'Adenomera', 'AdenomeraHylaedactylus']

Split it to train and validation:

[59]:

train_data, test_data = train_test_split(

data,

test_size=TEST_SIZE,

random_state=RANDOM_STATE

)

print(f'Data is splitted. Parts sizes: train_data = {train_data.shape}, test_data = {test_data.shape}')

train_data.head()

Data is splitted. Parts sizes: train_data = (5756, 29), test_data = (1439, 29)

[59]:

| MFCCs_ 1 | MFCCs_ 2 | MFCCs_ 3 | MFCCs_ 4 | MFCCs_ 5 | MFCCs_ 6 | MFCCs_ 7 | MFCCs_ 8 | MFCCs_ 9 | MFCCs_10 | ... | MFCCs_20 | MFCCs_21 | MFCCs_22 | Family | Genus | Species | RecordID | AdenomeraHylaedactylus | Adenomera | Leptodactylidae | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3838 | 1.0 | 0.389057 | 0.283855 | 0.558597 | 0.142120 | 0.006777 | -0.100356 | 0.015060 | 0.277700 | 0.062747 | ... | -0.042803 | 0.024793 | 0.177462 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 22 | 1 | 1 | 1 |

| 293 | 1.0 | 0.339049 | -0.001276 | 0.075088 | 0.298091 | 0.190639 | 0.022295 | 0.049216 | 0.175380 | -0.007751 | ... | 0.124870 | -0.004888 | -0.040086 | Leptodactylidae | Adenomera | AdenomeraAndre | 7 | 0 | 1 | 1 |

| 1593 | 1.0 | 0.211356 | 0.132368 | 0.530019 | 0.181015 | 0.047415 | -0.142114 | 0.000687 | 0.249328 | 0.032000 | ... | -0.160102 | 0.084770 | 0.276008 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 15 | 1 | 1 | 1 |

| 4669 | 1.0 | 0.069635 | 0.170713 | 0.583894 | 0.275507 | 0.086236 | -0.152521 | -0.032355 | 0.268403 | 0.054420 | ... | -0.058546 | 0.205891 | 0.211869 | Leptodactylidae | Adenomera | AdenomeraHylaedactylus | 24 | 1 | 1 | 1 |

| 940 | 1.0 | 0.222777 | -0.069955 | 0.299370 | 0.318585 | 0.094394 | -0.019920 | 0.120537 | 0.192053 | 0.047852 | ... | 0.023067 | -0.044556 | 0.006679 | Dendrobatidae | Ameerega | Ameeregatrivittata | 11 | 0 | 0 | 0 |

5 rows × 29 columns

Indicate that we are solving multi-label classification problem. Default metric and loss for this task is logloss.

[60]:

task = Task('multilabel')

multilabel isn`t supported in lgb

Specifying the roles. Now we have several columns with target variables, and it’s necessary to specify them all.

[61]:

roles = {

'target': ['Leptodactylidae', 'Adenomera', 'AdenomeraHylaedactylus'],

'drop': ['RecordID', 'Species', 'Genus', 'Family']

}

Create TabularAutoML instance. One of the differences in this case is that by default, random forest algorithm will be used at the end before blending.

[62]:

automl = TabularAutoML(

task = task,

timeout = 3600,

cpu_limit = N_THREADS,

reader_params = {'n_jobs': N_THREADS, 'cv': N_FOLDS, 'random_state': RANDOM_STATE}, #TODO: N_THREADS

general_params = {'use_algos': 'auto'}

)

Training:

[63]:

%%time

out_of_fold_predictions = automl.fit_predict(train_data, roles = roles, verbose = 1)

[11:36:53] Stdout logging level is INFO.

[11:36:53] Task: multilabel

[11:36:53] Start automl preset with listed constraints:

[11:36:53] - time: 3600.00 seconds

[11:36:53] - CPU: 4 cores

[11:36:53] - memory: 16 GB

[11:36:53] Train data shape: (5756, 29)

[11:36:55] Layer 1 train process start. Time left 3597.53 secs

[11:36:55] Start fitting Lvl_0_Pipe_0_Mod_0_LinearL2 ...

[11:36:57] Fitting Lvl_0_Pipe_0_Mod_0_LinearL2 finished. score = -1.7529212493912845

[11:36:57] Lvl_0_Pipe_0_Mod_0_LinearL2 fitting and predicting completed

[11:36:57] Time left 3596.09 secs

[11:36:57] Start fitting Lvl_0_Pipe_1_Mod_0_RFSklearn ...

[11:37:17] Fitting Lvl_0_Pipe_1_Mod_0_RFSklearn finished. score = -1.7417950976311207

[11:37:17] Lvl_0_Pipe_1_Mod_0_RFSklearn fitting and predicting completed

[11:37:17] Start hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_RFSklearn ... Time budget is 300.00 secs

[11:42:18] Hyperparameters optimization for Lvl_0_Pipe_1_Mod_1_Tuned_RFSklearn completed

[11:42:18] Start fitting Lvl_0_Pipe_1_Mod_1_Tuned_RFSklearn ...

[11:42:24] Fitting Lvl_0_Pipe_1_Mod_1_Tuned_RFSklearn finished. score = -1.7253897032715284

[11:42:24] Lvl_0_Pipe_1_Mod_1_Tuned_RFSklearn fitting and predicting completed

[11:42:24] Time left 3268.90 secs

[11:42:35] Selector_CatBoost fitting and predicting completed

[11:42:35] Start fitting Lvl_0_Pipe_2_Mod_0_CatBoost ...

[11:43:09] Fitting Lvl_0_Pipe_2_Mod_0_CatBoost finished. score = -1.7239793367012388

[11:43:09] Lvl_0_Pipe_2_Mod_0_CatBoost fitting and predicting completed

[11:43:09] Start hyperparameters optimization for Lvl_0_Pipe_2_Mod_1_Tuned_CatBoost ... Time budget is 300.00 secs

[11:48:11] Hyperparameters optimization for Lvl_0_Pipe_2_Mod_1_Tuned_CatBoost completed

[11:48:11] Start fitting Lvl_0_Pipe_2_Mod_1_Tuned_CatBoost ...

[11:50:05] Fitting Lvl_0_Pipe_2_Mod_1_Tuned_CatBoost finished. score = -1.7232911778884046

[11:50:05] Lvl_0_Pipe_2_Mod_1_Tuned_CatBoost fitting and predicting completed

[11:50:05] Time left 2807.87 secs

[11:50:05] Layer 1 training completed.

[11:50:05] Blending: optimization starts with equal weights and score -1.7285958080655424

[11:50:05] Blending: iteration 0: score = -1.7225303376046726, weights = [0. 0. 0.06385981 0.5410291 0.39511114]

[11:50:05] Blending: iteration 1: score = -1.7225227486743002, weights = [0. 0. 0.05698766 0.5967933 0.34621903]

[11:50:05] Blending: iteration 2: score = -1.7231152348780834, weights = [0. 0. 0.05289694 0.618034 0.32906908]

[11:50:05] Blending: iteration 3: score = -1.7231152348780834, weights = [0. 0. 0.05289694 0.618034 0.32906908]

[11:50:05] Blending: no score update. Terminated

[11:50:05] Automl preset training completed in 792.55 seconds

[11:50:05] Model description: